Explanation of AI Models and Testing Image Set

The API consists of six high-level models with over 50 total tests, each of which looks for different indicators of image manipulation. If one or more of the models find enough evidence of potential manipulation, the image is flagged with a high tamper score. The tamper score is on a scale from 0 to 100; we recommend that customers use a threshold of 50 for making business decisions. A score over 50 indicates a high likelihood of tampering, and the photo should be inspected further.
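As a minimal sketch of how the recommended threshold might be applied on the customer side, the helper below treats any score strictly over 50 as needing inspection. The function name `needs_inspection` is hypothetical and is not part of the Attestiv API; only the 0 to 100 scale and the threshold of 50 come from the description above.

```python
def needs_inspection(tamper_score: float, threshold: float = 50) -> bool:
    """Return True when a tamper score is over the chosen threshold.

    Hypothetical helper: only the 0-100 scale and the default
    threshold of 50 are taken from the text above.
    """
    if not 0 <= tamper_score <= 100:
        raise ValueError("tamper score must be between 0 and 100")
    # "A score over 50" is read here as strictly greater than the threshold.
    return tamper_score > threshold
```

For example, `needs_inspection(72)` would route the photo to a reviewer, while `needs_inspection(50)` would not, since the score is not over the threshold.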

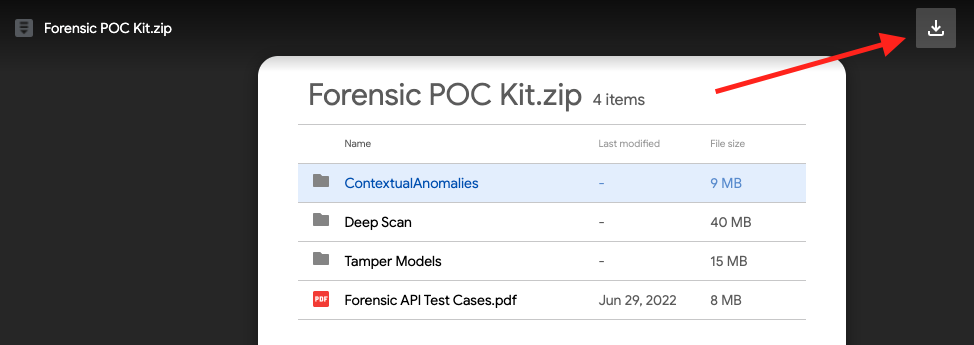

This document gives a brief description of how each model works and how to test an image with respect to each algorithm. Example images are provided in a zip file to show how each algorithm can be triggered by specific tampered attributes within each file. These can be “dragged and dropped” into the Analysis tool on the dashboard or consumed via a sample workflow. These photos have been intentionally tampered with to illustrate the types of manipulations that the models will find. Note that individual scores may change as the models are improved over time.

Testing and Model Accuracy

Testing is performed on internally and externally sourced datasets, each consisting of intentionally tampered and clean images. The platform identifies tampered images by running them (via drag and drop or through a workflow) in real time through six algorithms, each uniquely designed to identify tampering by analyzing different image attributes. Our goal is always to improve accuracy while minimizing false positives. Fraudsters are constantly developing new techniques for tampering with digital media, so Attestiv is constantly improving the forensic algorithms and adding images to the Attestiv dataset. To ensure 99.99% confidence in identifying tampered media, it is recommended that users utilize Attestiv’s media fingerprinting for tamper prevention in combination with Attestiv tamper detection algorithms.

Model Examples

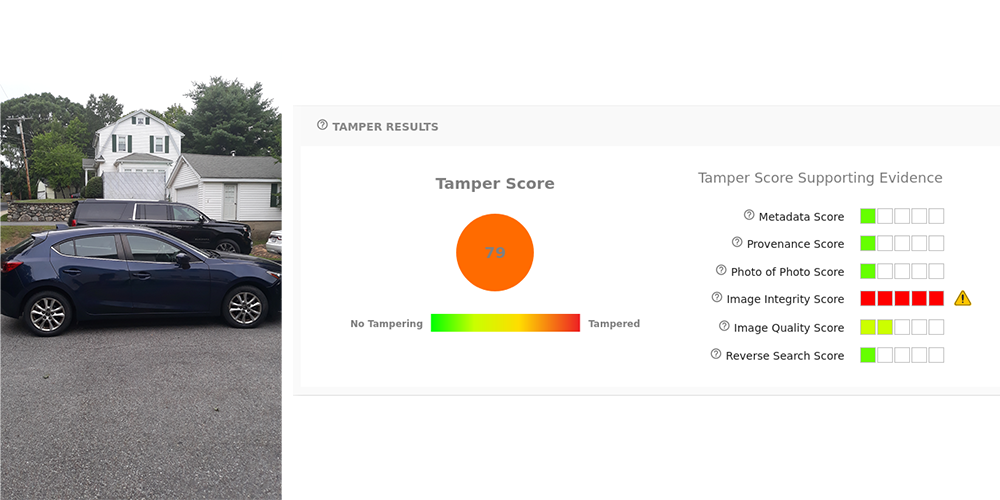

Image Integrity

The integrity model finds evidence of tampering in the image file itself. This includes checks such as mismatched file extensions and corrupted contents. To test this, take any jpeg image and change the file extension to .png. Note that the opposite test is not currently supported; the forensic API only supports jpeg images. If you send a png file, even one that has been renamed to .jpeg, it will be rejected by the API (and the dashboard will not show any forensic results). The example car.png is actually a jpeg file. On the Analysis page, this inconsistency is flagged by the integrity model.
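To illustrate the kind of extension mismatch described above, the sketch below compares a file's extension with the format implied by its leading bytes. It relies on the standard jpeg and png file signatures; the function names are hypothetical, and this is not a description of how the integrity model itself is implemented.

```python
from pathlib import Path
from typing import Optional

# Standard leading bytes ("magic numbers") for the two formats.
JPEG_MAGIC = b"\xff\xd8\xff"
PNG_MAGIC = b"\x89PNG\r\n\x1a\n"

# Extensions this sketch knows how to interpret.
EXTENSION_FORMATS = {".jpg": "jpeg", ".jpeg": "jpeg", ".png": "png"}


def sniff_format(header: bytes) -> Optional[str]:
    """Guess the real format from the first bytes of the file."""
    if header.startswith(JPEG_MAGIC):
        return "jpeg"
    if header.startswith(PNG_MAGIC):
        return "png"
    return None


def extension_mismatch(filename: str, header: bytes) -> bool:
    """True when the extension claims one format but the bytes say another."""
    claimed = EXTENSION_FORMATS.get(Path(filename).suffix.lower())
    actual = sniff_format(header)
    return claimed is not None and actual is not None and claimed != actual
```

A file like car.png from the examples, whose contents begin with the jpeg signature, would make `extension_mismatch` return `True`.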

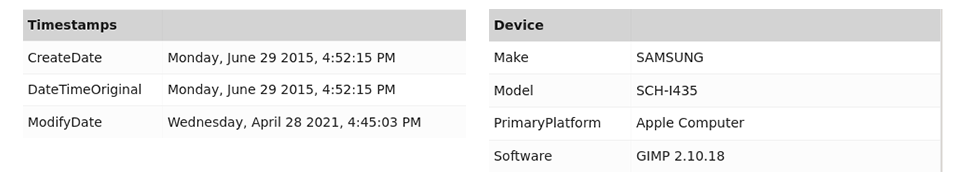

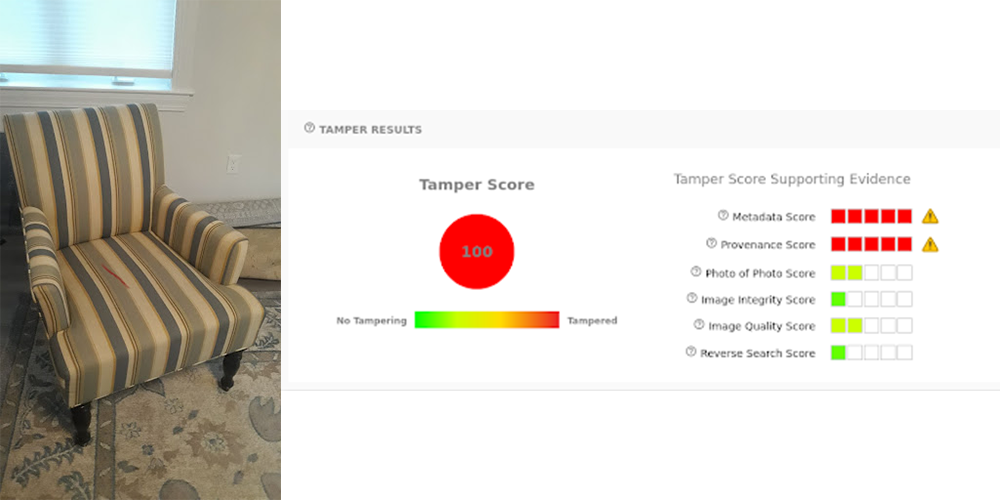

Metadata

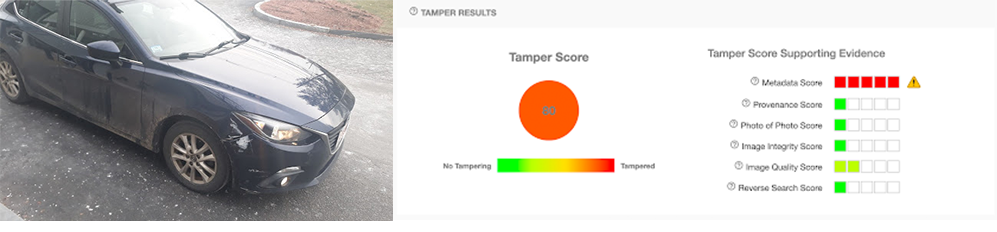

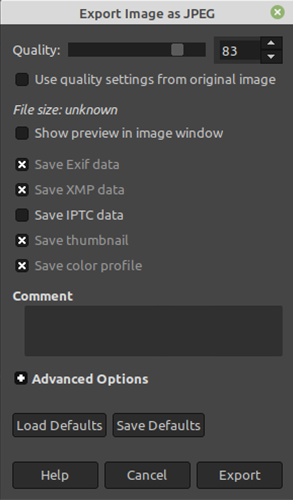

The metadata model looks for indicators of tampering in the image’s EXIF data. Many editing tools leave traces in the metadata, and fraudsters may also edit this data directly to cover their tracks. To test this, edit a photo using the GNU Image Manipulation Program (GIMP). It doesn’t matter what edits you make, provided that you use File->Export As to save a new copy. When you upload the photo to the Analysis page, you can see how the metadata has been altered in the Metadata Details section. Namely, the timestamps indicate that the photo was modified after it was created, and the device section shows that GIMP was used.

In the example below, location-zero-zero.jpg has had its GPS Latitude and GPS Longitude EXIF properties changed to be 0.0, 0.0. This is a location in the middle of the ocean that cannot be resolved to an address. When we use the Analysis tool on this image it gets flagged by the metadata model because the location is questionable.
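The three metadata signals mentioned in this section (editing software, a modification time later than the creation time, and a 0.0, 0.0 location) can be sketched as simple checks. This is an illustration only, not the metadata model. It assumes the EXIF fields have already been read into a plain dictionary keyed by the common tag names `Software`, `DateTimeOriginal`, and `DateTime`, with GPS coordinates already converted to decimal degrees under the hypothetical keys `GPSLatitude` and `GPSLongitude`.

```python
from datetime import datetime

# EXIF stores timestamps as "YYYY:MM:DD HH:MM:SS".
EXIF_TIME_FORMAT = "%Y:%m:%d %H:%M:%S"

# Illustrative list; GIMP is the only editor named in this document.
KNOWN_EDITORS = ("gimp",)


def metadata_flags(exif: dict) -> list:
    """Return a list of simple warning labels for parsed EXIF data."""
    flags = []

    software = str(exif.get("Software", "")).lower()
    if any(name in software for name in KNOWN_EDITORS):
        flags.append("editing-software")

    created = exif.get("DateTimeOriginal")
    modified = exif.get("DateTime")
    if created and modified:
        created_at = datetime.strptime(created, EXIF_TIME_FORMAT)
        modified_at = datetime.strptime(modified, EXIF_TIME_FORMAT)
        if modified_at > created_at:
            flags.append("modified-after-creation")

    # A position of exactly 0.0, 0.0 cannot be resolved to an address.
    if exif.get("GPSLatitude") == 0 and exif.get("GPSLongitude") == 0:
        flags.append("questionable-location")

    return flags
```

The labels returned here are made up for the example; the dashboard presents its own findings in the Metadata Details section.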

Provenance

The provenance model uses a method of “digital ballistics” to match images to the software in which they were saved (edited). If we trace the image back to an editing tool, we can be sure that the image has been manipulated in some way. Many photo editors use a common jpeg library to export images. This library is not used by any original image sources, i.e. phones or DSLR cameras. Therefore, if we trace an image back to this jpeg library, we can assume that some aspect of it is unoriginal.

In the case of GIMP, any photos that were exported with a quality lower than 90 will be matched against editing software by the provenance model. This is shown in the chair.jpg example above.

Many tools exist to manipulate metadata, but it is much harder to “hide” the image provenance. This model acts as a second layer of defense against potentially edited images.

Reverse Search

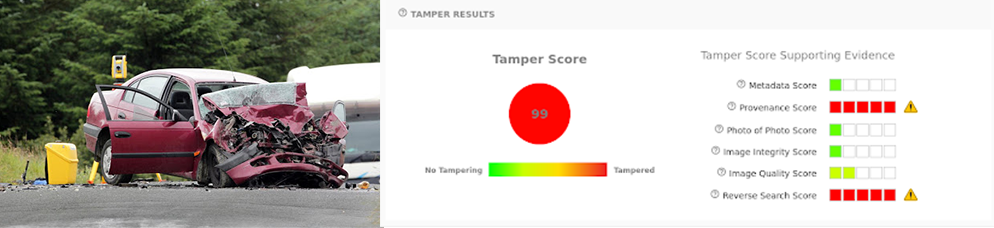

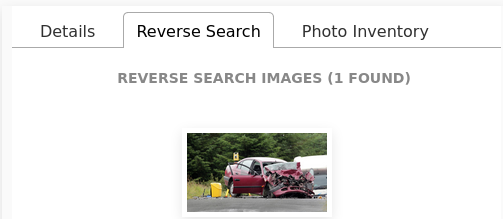

The reverse search model performs a reverse image search against a wide range of websites. If the model finds copies of the image elsewhere on the internet, it will return up to 10 links. To test this, download a photo off any major website and analyze it via our drag and drop tool or via a workflow.

The example car-crash-downloaded.jpg was saved from a Google search for car crashes. Note that the reverse search model is triggered as well as the provenance model – most images found online will have gone through some post processing, and it gives us more evidence that the photo is unoriginal.

When an image is identified by the reverse search model, we display thumbnails that link to the other locations where the image was found on the internet.

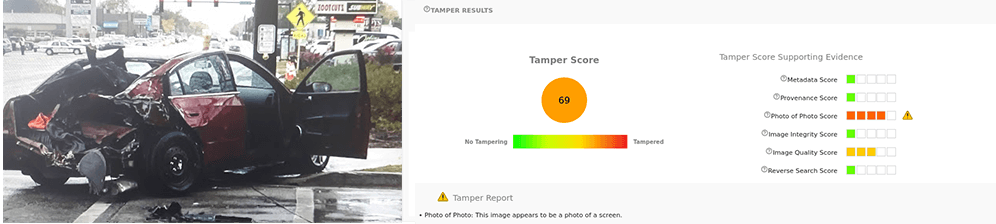

Photo of Photo (POP)

The photo of photo model identifies photos of other photos. Consider a fraudster who wants to make an insurance claim for a car crash that hasn’t happened. By lining up a camera in front of their computer screen, they can take a photo of a scene that appears to be authentic. The camera’s metadata will indicate that it was taken at the right time and place, but of course this is a fake scenario. The image car-crash.jpg is an example of this. We searched for car crashes online, opened one in full screen view, and then took a picture of the screen without any of the edges showing. This is flagged by the photo of photo model. Also note that the image quality has a score of 3, which is another indication that this is really a photo of a flat screen.

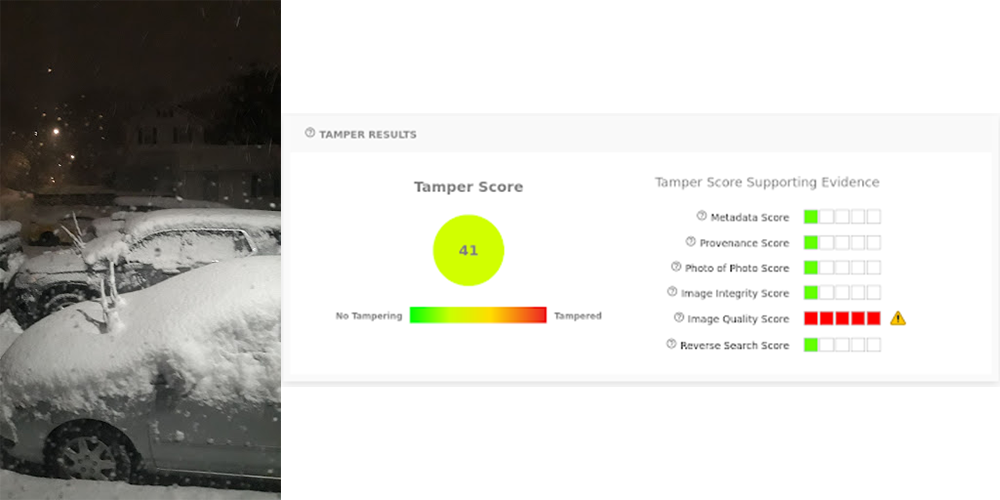

Image Quality

The quality model identifies photos that are exceptionally blurry or noisy. Attackers will often lower the quality of their images so that edits aren’t as visible. On the other hand, quality is a subjective metric that often warrants photo retakes as opposed to being a definitive indicator of fraud. You can test this model by creating a bad quality photo – snap any picture while your camera is moving around.

The example poor-quality.jpeg is a file which will result in a high quality score. When we use the Analysis tool on this image it gets flagged by the quality model because the photo is blurry and noisy. This is not enough by itself to produce a tamper score over 50, but users can leverage this information to automatically request photo retakes.
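For readers who want an intuition for how blur can be measured, the sketch below uses a common heuristic: the variance of a Laplacian filter response, which tends to be low for blurry images and higher for sharp ones. This is a substitute technique chosen for illustration, not a description of Attestiv's quality model, and it assumes the image has already been decoded into a grid of grayscale values.

```python
def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over the interior pixels.

    `gray` is a list of equal-length rows of grayscale values.
    Low values suggest few sharp edges, which often means blur.
    """
    height, width = len(gray), len(gray[0])
    if height < 3 or width < 3:
        raise ValueError("image must be at least 3x3 pixels")

    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )

    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)
```

Any cutoff separating "sharp" from "blurry" would have to be tuned on real photos; none is suggested here.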

Contextual Anomalies

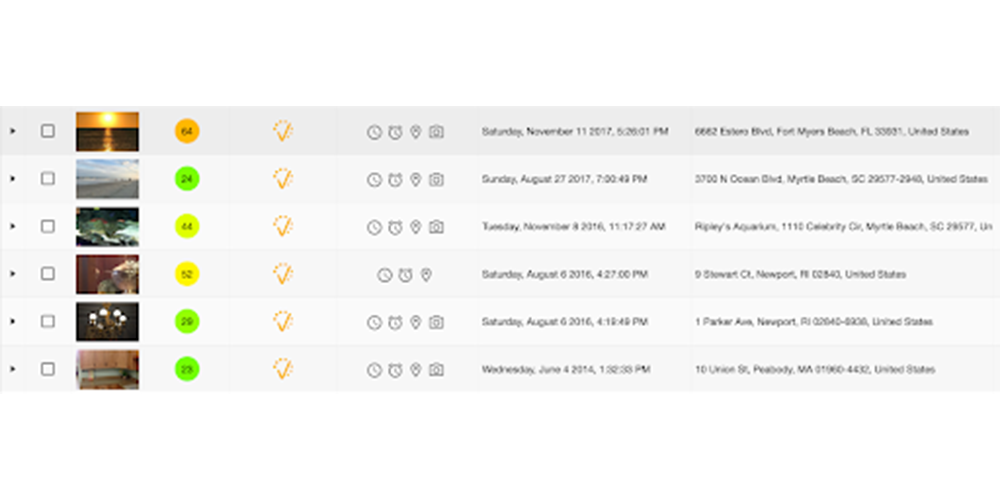

So far we have discussed models that look specifically at a single photo and some aspect(s) of it. The Attestiv platform also looks for anomalies between photos. This includes comparing related photos whose creation time or location differ by an amount greater than a configurable threshold. It also includes finding duplicate images or images that were created more than a configurable number of days ago. Finally, it checks for related photos that were taken with different camera types. We call this group of findings “Contextual Anomalies.”

In the image above, you will see a series of icons in the column to the left of the timestamp. Each of these represents a different contextual anomaly. Because these are related images that were taken at different times, in different locations, a number of years ago, and by different cameras, they are flagged. Below is a description of each contextual anomaly icon.

| Icon | Description |
| --- | --- |
| | The image is a duplicate within the system. |
| | The locations of two or more images are farther apart than the configured distance. |
| | The creation time of the image differs from the creation time of another image by more than the configured allowable difference. |
| | The age of the media is greater than the configured number of days. |
| | The image was taken with a camera that is different from the one used for the other images. |
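The five contextual checks described above can be sketched as comparisons across a group of related photos. Everything in this example is an assumption made for illustration: the `Photo` fields, the anomaly labels, the default thresholds, and the use of a content hash to detect duplicates. The platform's actual thresholds are configurable, and its implementation is not shown here.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Photo:
    sha256: str          # content hash, used here to spot duplicates
    taken_at: datetime   # creation time
    lat: float           # decimal degrees
    lon: float           # decimal degrees
    camera: str          # camera make/model string


def haversine_km(a: Photo, b: Photo) -> float:
    """Great-circle distance between two photos in kilometres."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlam = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def contextual_anomalies(photos, now,
                         max_km=1.0,                    # hypothetical default
                         max_gap=timedelta(hours=1),    # hypothetical default
                         max_age=timedelta(days=30)):   # hypothetical default
    """Return the set of anomaly labels found across related photos."""
    found = set()
    if len({p.sha256 for p in photos}) < len(photos):
        found.add("duplicate")
    if len({p.camera for p in photos}) > 1:
        found.add("camera")
    if any(now - p.taken_at > max_age for p in photos):
        found.add("age")
    # Compare every pair of photos for distance and time differences.
    for i, a in enumerate(photos):
        for b in photos[i + 1:]:
            if haversine_km(a, b) > max_km:
                found.add("location")
            if abs(a.taken_at - b.taken_at) > max_gap:
                found.add("time")
    return found
```

Comparing every pair keeps the sketch short; for large groups of photos a different strategy would likely be needed.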

Deep Scan Pixel Analysis (BETA)

The tamper models above can be said to check for “secondary indicators” of tampering. When someone edits a photo, the file metadata and provenance may change, but this will not reveal anything about how the contents of the image have changed. To solve this, Attestiv has developed an ML model for deep pixel analysis. The deep scan looks at the noise signature of a photo, that is, the imperceptible patterns left behind by the sensors in the camera. This pattern of noise is upset by any alteration to the image, such as blurring out detail in order to hide something, or inserting fake damage into a scene.

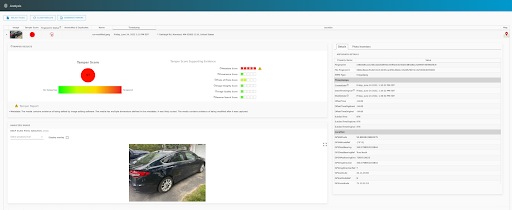

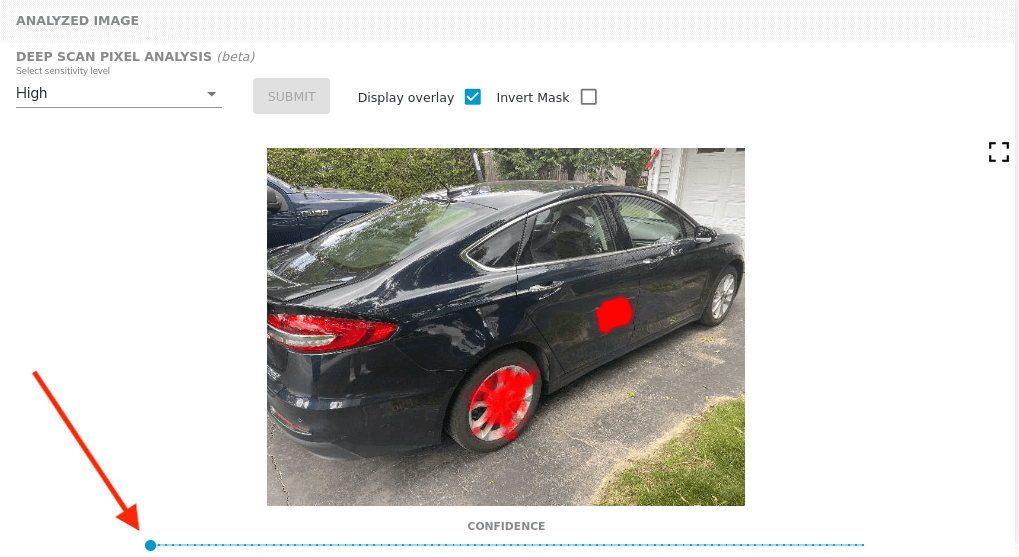

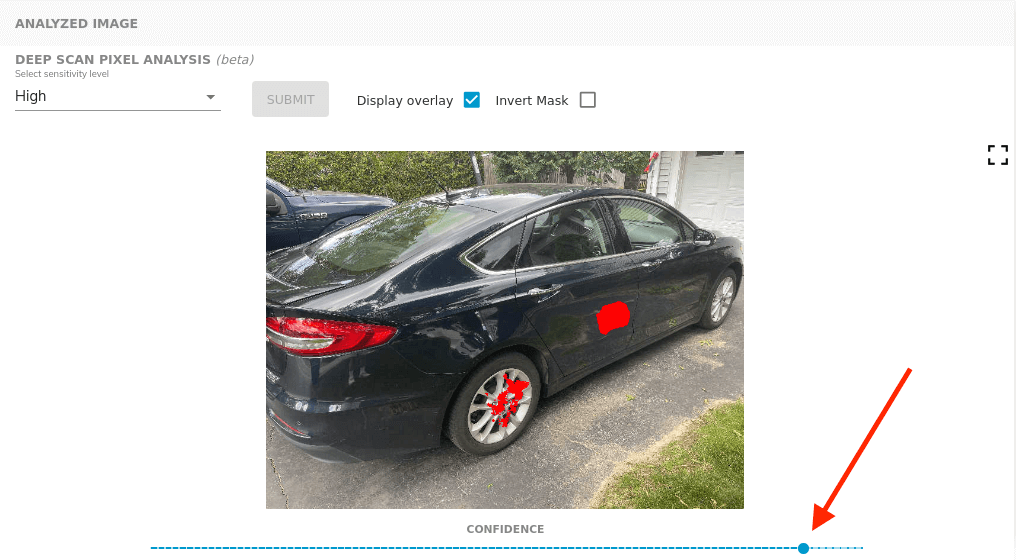

For example, the two vehicles below have been edited in GIMP. The blurring tool was used to slightly smudge regions on the body – this could have been done to hide minor existing damage. When run through the deep scan, these edits are immediately detected. You can view the files in the Deep Scan folder in the accompanying zip. Both photos are included with their original and modified versions, as well as the deep scan result.

Note that this sort of fraud analysis may require subjective interpretation on each individual image. Because the deep scan model is working with camera noise, it can be very sensitive to certain textures in photos. In particular, reflective surfaces on cars can trigger false positives – here there are no alterations near the wheels. The deep scan is in beta as we work to improve these situations, but in general these results should be seen as guidance towards identifying potential tampering, rather than indisputable proof. The dashboard provides multiple versions of the deep scan model, and you are encouraged to try them all in order to inspect the photos through various lenses. Do all the models agree when a certain region is marked red? Is this an area of the photo that someone would have reason to maliciously edit? Be sure to toggle the overlay on and off to visually inspect the area in question.

Below is a screenshot of the Attestiv drag and drop tool displaying the modified car photo. Note that the metadata model has already flagged this photo as potentially being edited. For a closer inspection, you can initiate a deep scan in the Analyzed Image section. There are three options for the scan sensitivity; a higher sensitivity will analyze the photo at a greater depth, but it will take longer. As mentioned, we recommend trying multiple sensitivity options to see if the models tend to agree with each other.

After selecting the sensitivity option from the dropdown, the deep scan will begin and the Display overlay option will be selected automatically. When the result is ready, this toggle can be used to view the photo with or without regions being highlighted.

The Invert Mask toggle will invert the result; all clear regions will become highlighted and vice versa. In most cases this presents an image that’s almost entirely red, with some blank spots. This can make it easier to pick out areas of interest. Occasionally, there may also be results which are mostly red to begin with, and here the toggle is used to flip the notion of which regions are “suspicious”.

Finally, a slider underneath the image will change the confidence threshold. The model returns a confidence value for each pixel, which correlates to how “suspicious” it finds this region of the photo. By increasing the slider values, we eliminate the less confident pixels and cut down on any noise. In this example, setting a higher confidence threshold removes highlighting on the wheel, which has not actually been modified.
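The effect of the confidence slider and the Invert Mask toggle can be pictured as two small operations on a grid of per-pixel confidence values. The sketch below is only an illustration of that idea: it assumes confidences in the range 0 to 1 stored as nested lists, and the function names are hypothetical rather than part of the dashboard or API.

```python
def highlight_mask(confidence, threshold):
    """Mark pixels whose confidence is at or above the threshold."""
    return [[value >= threshold for value in row] for row in confidence]


def invert_mask(mask):
    """Swap highlighted and clear pixels, like the Invert Mask toggle."""
    return [[not flagged for flagged in row] for row in mask]


# Tiny 2x2 example: raising the threshold drops the less confident pixel.
confidence = [[0.9, 0.2],
              [0.55, 0.1]]
low = highlight_mask(confidence, 0.5)    # [[True, False], [True, False]]
high = highlight_mask(confidence, 0.8)   # [[True, False], [False, False]]
```

Raising the threshold from 0.5 to 0.8 removes the pixel with confidence 0.55 from the highlight, which mirrors how a higher slider value cleared the highlighting on the unmodified wheel.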

The deep pixel scan is one more tool in Attestiv’s toolbox for analyzing and detecting tampered media. When used in conjunction with the other tampering models, users can confidently detect the most common types of fraud in images. For added security, it is recommended that users also utilize Attestiv’s media fingerprinting in tandem whenever possible.