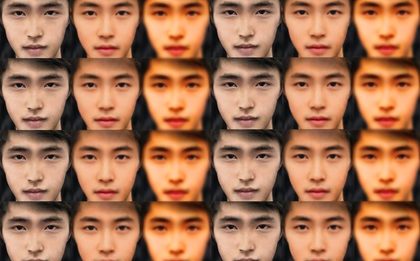

Deepfakes: the new enterprise cyberthreat vector?

By building a secure digital foundation that is resilient to deepfake media, CIOs can help their organizations avoid becoming the next victims of deepfake fraud.

Attack of the Deepfakes

As deepfake videos become easier to produce, the age-old cliché that ‘seeing is believing’ is being upended. What can be done to ensure trust?