
AI & Fraud Detection Technology

Industry-leading protection against altered or tampered digital media

The Attestiv Platform is built on our patented AI technology to authenticate, validate, and protect the integrity of important digital media and data.

Our technology examines any digital media, such as photos, videos, documents, sensor data, and telemetry data, whether it is captured by Attestiv apps and APIs or imported from an external source, to determine whether it has been altered or tampered with.

We forensically scan each item for anomalies and assign it a tamper score. We can optionally store the item's fingerprint on a blockchain (distributed ledger), where it cannot be changed, enabling validation at any point in the future. We also offer additional analysis, including text extraction and object recognition, to help automate and simplify the processing of digital media.
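The page does not say how fingerprints are computed or stored. As a minimal sketch, assuming a SHA-256 hash over the file's raw bytes and using an in-memory dict as a hypothetical stand-in for the distributed ledger (`ledger`, `register`, and `validate` are illustrative names, not Attestiv APIs), fingerprinting at intake and validating later could look like this:

```python
import hashlib

# Hypothetical stand-in for the distributed ledger: an in-memory mapping from
# item ID to fingerprint. A real system would write to a blockchain instead.
ledger: dict[str, str] = {}

def fingerprint(media_bytes: bytes) -> str:
    """Return the SHA-256 digest of the raw media bytes as a hex string."""
    return hashlib.sha256(media_bytes).hexdigest()

def register(item_id: str, media_bytes: bytes) -> str:
    """Record an item's fingerprint once, at intake; entries are never overwritten."""
    if item_id in ledger:
        raise ValueError(f"{item_id} is already registered")
    ledger[item_id] = fingerprint(media_bytes)
    return ledger[item_id]

def validate(item_id: str, media_bytes: bytes) -> bool:
    """Return True only if the bytes still match the fingerprint recorded at intake."""
    recorded = ledger.get(item_id)
    return recorded is not None and recorded == fingerprint(media_bytes)

photo = b"\xff\xd8\xff\xe0 example photo bytes"
register("claim-42/photo-1", photo)
print(validate("claim-42/photo-1", photo))            # True
print(validate("claim-42/photo-1", photo + b"\x00"))  # False: one extra byte
```

A hash like this proves only that an item has not changed since it was registered; it says nothing about edits made before intake, which is why the forensic scan and tamper score are a separate step.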

Our Process

Intake via app or API

Analysis and tamper detection

Validation & Reporting

Automation (optional)

Details ↓

Intake via app or API

Details ↓

Real-time analysis & tamper detection

Details ↓

Validation & Reporting

Details ↓

Automation

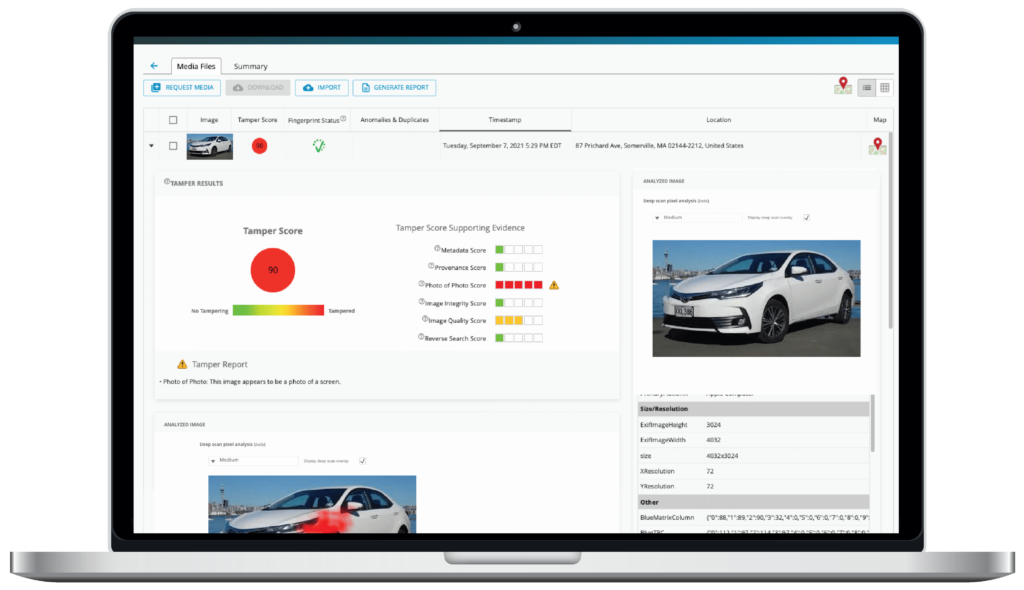

Tamper Scoring System

Every item analyzed by our system receives a tamper score from 1 to 100. Items with lower scores are considered trustworthy and need no further review.

We also group related images into an Analysis Report that summarizes the results and calls out areas needing review.
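To make the scoring and grouping concrete, here is a minimal sketch of how scores on the 1 to 100 scale might be rolled up into a report. The `REVIEW_THRESHOLD` value, the `AnalyzedItem` type, and the report fields are hypothetical; the page says only that lower scores are trustworthy, not where the review cut-off lies.

```python
from dataclasses import dataclass

# Hypothetical cut-off: the page says only that lower scores are trustworthy,
# not where the line between "trustworthy" and "needs review" is drawn.
REVIEW_THRESHOLD = 50

@dataclass
class AnalyzedItem:
    name: str
    tamper_score: int  # 1 (trustworthy) to 100 (most suspicious)

def build_report(items: list[AnalyzedItem]) -> dict:
    """Summarize a group of related items and call out the ones needing review."""
    for item in items:
        if not 1 <= item.tamper_score <= 100:
            raise ValueError(f"{item.name}: score {item.tamper_score} is outside 1-100")
    flagged = sorted(i.name for i in items if i.tamper_score >= REVIEW_THRESHOLD)
    return {
        "total": len(items),
        "needs_review": flagged,
        "max_score": max((i.tamper_score for i in items), default=None),
    }

report = build_report([
    AnalyzedItem("front.jpg", 8),
    AnalyzedItem("rear.jpg", 73),
    AnalyzedItem("vin.jpg", 22),
])
print(report)  # {'total': 3, 'needs_review': ['rear.jpg'], 'max_score': 73}
```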

Our AI models explained

Images

Many editing tools leave traces in an image’s metadata, and bad actors may also edit this data directly in an attempt to cover their tracks. The metadata model searches the image metadata for signs of this kind of tampering.

Documents

The metadata model looks for indicators of tampering in the scanned document’s EXIF data. Many editing tools leave traces in the metadata, and fraudsters may also edit this data directly to cover their tracks.
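The metadata model itself is not described in detail. As a rough illustration only, two simple heuristics of the kind the paragraphs above describe could be run over already-extracted EXIF tags: an editing tool named in the standard `Software` tag, and a `DateTime` (last modified) later than `DateTimeOriginal` (capture time). The `EDITOR_SIGNATURES` list and the `metadata_flags` function are hypothetical, and the input is assumed to be a plain dict of tag names to string values.

```python
# Hypothetical, non-exhaustive list of editing tools whose names may appear
# in the EXIF "Software" tag.
EDITOR_SIGNATURES = ("photoshop", "gimp", "lightroom", "snapseed", "pixelmator")

def metadata_flags(exif: dict[str, str]) -> list[str]:
    """Return human-readable reasons why this metadata looks edited."""
    if not exif:
        return ["metadata missing or stripped"]
    flags = []
    software = exif.get("Software", "")
    if any(sig in software.lower() for sig in EDITOR_SIGNATURES):
        flags.append(f"saved by editing tool: {software}")
    # EXIF timestamps use "YYYY:MM:DD HH:MM:SS", so string order is time order.
    original = exif.get("DateTimeOriginal")
    modified = exif.get("DateTime")
    if original and modified and modified > original:
        flags.append(f"file modified at {modified}, after capture at {original}")
    return flags

print(metadata_flags({
    "Software": "Adobe Photoshop 25.0",
    "DateTimeOriginal": "2024:03:01 10:00:00",
    "DateTime": "2024:03:05 18:30:00",
}))
```

Flags like these are signals rather than proof: many platforms strip metadata on upload, and a determined fraudster can rewrite these tags, which is why such checks are combined with the other models below.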

Images

The provenance model matches an image to the software that last saved it, possibly after editing. If the image can be traced back to an editing tool such as Photoshop, that is reason to treat it with some suspicion.

Documents

The provenance model matches a scanned document to the software used to save it. If the document can be traced back to an editing tool, that suggests it has been manipulated in some way.

Images only

The photo-of-photo model identifies photos of other photos or of screens (televisions, computer monitors, etc.).

Images & Documents

The integrity model finds evidence of tampering in the file itself, including AI-generated content, mismatched file extensions, and corrupted contents.
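One of the integrity checks named above, mismatched file extensions, is easy to illustrate. The sketch below compares a file's leading bytes against a few well-known format signatures ("magic numbers") for JPEG, PNG, and PDF. The `SIGNATURES` table and the `extension_mismatch` function are hypothetical and cover only these three formats. They show the general technique, not how the integrity model is implemented.

```python
import os
from typing import Optional

# A few well-known file signatures ("magic numbers") and the extensions
# that should accompany them. Real checks cover many more formats.
SIGNATURES = {
    b"\xff\xd8\xff": {".jpg", ".jpeg"},
    b"\x89PNG\r\n\x1a\n": {".png"},
    b"%PDF-": {".pdf"},
}

def extension_mismatch(filename: str, head: bytes) -> Optional[bool]:
    """True if the file's leading bytes contradict its extension,
    False if they agree, None if the format is not recognized."""
    ext = os.path.splitext(filename)[1].lower()
    for magic, expected_exts in SIGNATURES.items():
        if head.startswith(magic):
            return ext not in expected_exts
    return None

print(extension_mismatch("receipt.pdf", b"\x89PNG\r\n\x1a\n\x00\x00"))  # True
print(extension_mismatch("damage.JPG", b"\xff\xd8\xff\xe1"))             # False
```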

Images only

The quality model identifies photos that are exceptionally blurry or noisy.
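The page does not say how blur is measured. A common heuristic, used here only for illustration, is the variance of the Laplacian: sharp images have strong edges and therefore widely varying Laplacian responses, while blurry images do not. The sketch works on a grayscale image given as a list of rows, and the `BLUR_THRESHOLD` value is a hypothetical placeholder. It covers blur only, not noise.

```python
def laplacian_variance(gray: list[list[float]]) -> float:
    """Variance of the 4-neighbour Laplacian over the interior pixels."""
    h, w = len(gray), len(gray[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1] - 4 * gray[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)

# Hypothetical cut-off; in practice it depends on resolution and content.
BLUR_THRESHOLD = 100.0

def is_blurry(gray: list[list[float]]) -> bool:
    """Few sharp edges means little Laplacian response, hence low variance."""
    return laplacian_variance(gray) < BLUR_THRESHOLD

checkerboard = [[255 if (x + y) % 2 else 0 for x in range(8)] for y in range(8)]
flat = [[128] * 8 for _ in range(8)]
print(is_blurry(checkerboard), is_blurry(flat))  # False True
```

A flat image scores zero here just as a badly blurred one would, so in practice a measure like this is one input among several rather than a verdict on its own.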

Images only

The reverse search model performs a reverse image search against a wide range of websites.

Documents only

This model uses deep learning to highlight any text alterations it finds in the document and assigns a graded score based on its confidence in those findings.

Videos only

This model checks for AI-generated content and graphics.

Videos only

These models check for facial replacement or lip-syncing.

Features

Patented technology

Attestiv makes extensive use of AI to perform media analysis, categorization, text extraction, and authentication. The scoring system breaks results down by category, with an easy-to-understand description of each potential manipulation.

Enterprise-ready

Attestiv is designed to meet the security and compliance requirements of enterprises in regulated industries, offering data encryption at rest and in transit, APIs that purge all data after analysis, and access control lists (ACLs) to securely manage and administer your organization's settings.

Tamper-proof photos & videos

As media is captured by cameras or imported from digital media libraries, Attestiv records its data and metadata and can optionally store a unique “fingerprint” in an encrypted, tamper-proof distributed ledger.

Optimized User Experience

Whether your goal is to save time and money on media inspection or to prevent fraud and cyberthreats, Attestiv solutions help you automate the handling and validation of digital media while meeting your user experience goals.

Demo Videos

Attestiv Products, Solutions & Technology

Scalable. Secure. Compliant.

From the Blog

Pixels to Proof: Deepfake Forensics for Fraud‑Free Insurance Claims

Melanie Quandt interviews Nicos Vekiarides on the threat of deepfakes and the role of human review in the overall process.

Introducing Attestiv’s Free Tier for Journalists and Media: Defend Truth with Deepfake Detection

Attestiv introduces a free starter tier, providing deepfake detection for journalists, fact-checkers, and media professionals.

5 Ways Insurance Companies Save Money with Automated Deepfake & Media Fraud Detection

For insurance, there are more benefits to automated deepfake & media fraud detection than meets the eye.