Our 50th software release ushers in exciting new technology

People say that every photograph tells a story. Here at Attestiv, we can always find at least two.

First, there’s the visual story in the picture. We’re used to talking about that: the objects, the people, the world we see.

Second, there’s the story of where the picture came from. Our tools go deeper than what’s visible to find the imperceptible traces and patterns left behind by the creation process, from the sensors on the camera all the way to the publishing software.

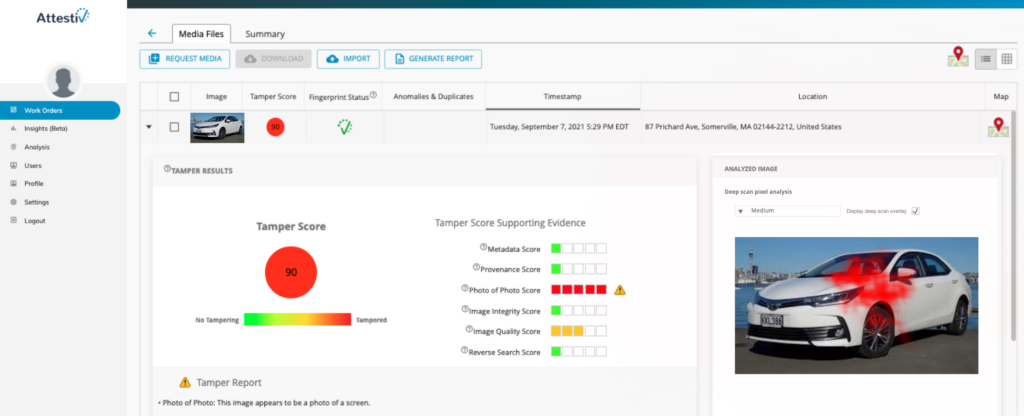

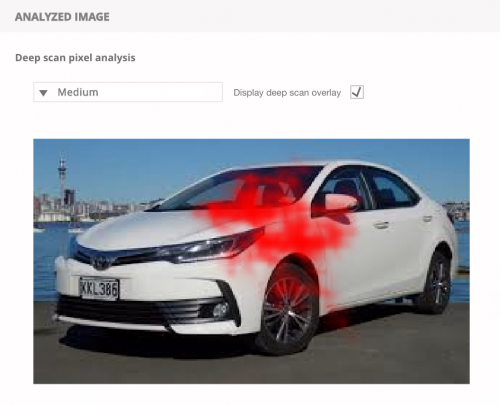

Deep Scan Pixel Analysis

No camera takes a perfect picture. Every camera adds its own texture: an almost invisible pattern of systematic noise introduced as the camera's sensor and electronics convert incoming light into a digital photograph.

Our tools identify those textures within a photograph and use them to read the deep story: Was the entire picture taken by the same camera? Do all the textures match? Are they all aligned the same way? Have any of them been altered?
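To make the idea of a camera "texture" more concrete, here is a minimal Python sketch of a classic, simplified sensor-noise check. It is not Attestiv's model. It assumes the camera's fingerprint is already known, models that fingerprint as additive noise for simplicity (real sensor fingerprints are largely multiplicative), and uses a 3x3 mean filter as a crude denoiser. The noise residual of each block (the image minus its denoised version) is correlated with the fingerprint; a block transplanted from a different camera should correlate poorly. All function names and the synthetic data are hypothetical.

```python
import numpy as np

def box_blur(img: np.ndarray, k: int = 3) -> np.ndarray:
    """k x k mean filter: a crude stand-in for the denoisers real systems use."""
    pad = k // 2
    padded = np.pad(img, pad, mode="reflect")
    out = np.zeros(img.shape, dtype=np.float64)
    # Sum the k*k shifted copies, then divide to get the local mean.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def noise_residual(img: np.ndarray) -> np.ndarray:
    """High-frequency 'texture' left after removing the smooth scene content."""
    img = img.astype(np.float64)
    return img - box_blur(img)

def normalized_correlation(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation of two equally shaped arrays (0.0 if either is flat)."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def block_scores(img: np.ndarray, fingerprint: np.ndarray, block: int = 16) -> np.ndarray:
    """Correlate each block's noise residual with the known camera fingerprint."""
    res = noise_residual(img)
    rows, cols = img.shape[0] // block, img.shape[1] // block
    scores = np.zeros((rows, cols))
    for r in range(rows):
        for c in range(cols):
            sl = (slice(r * block, (r + 1) * block), slice(c * block, (c + 1) * block))
            scores[r, c] = normalized_correlation(res[sl], fingerprint[sl])
    return scores

# Synthetic demo: a smooth scene shot by hypothetical "camera A",
# with one 16x16 patch transplanted from hypothetical "camera B".
rng = np.random.default_rng(0)
scene = np.add.outer(np.linspace(0, 50, 64), np.linspace(0, 50, 64))
camera_a = rng.normal(0, 2, (64, 64))
camera_b = rng.normal(0, 2, (64, 64))
photo = scene + camera_a
photo[16:32, 16:32] = scene[16:32, 16:32] + camera_b[16:32, 16:32]

scores = block_scores(photo, camera_a)
print(np.round(scores, 2))
```

In this synthetic setup, every block except the transplanted one (row 1, column 1 of the score grid) should correlate strongly with camera A's fingerprint, while the transplanted block's score should sit near zero.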

Using an attention-based machine learning model, we have learned how those textures are affected by different kinds of image manipulation. Attention lets the model focus on small details, learning which parts of the data matter most in which contexts (1).
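Attention itself is a standard building block. The sketch below shows scaled dot-product attention, the form described in the linked reference, in plain NumPy. If image patches are treated as tokens, each patch's output becomes a weighted mix of all patches, with the weights determined by how strongly their queries and keys match. The patch embeddings and projection matrices here are random placeholders; this post does not describe how Attestiv's model encodes textures or how its attention layers are configured.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray):
    """Return (outputs, weights) for queries q, keys k, and values v."""
    d_k = q.shape[-1]
    # Similarity of every query to every key, scaled to keep softmax well behaved.
    scores = q @ k.swapaxes(-1, -2) / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

# Hypothetical example: 4 image patches, each embedded as an 8-dimensional vector.
rng = np.random.default_rng(42)
patches = rng.normal(size=(4, 8))
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
out, weights = scaled_dot_product_attention(patches @ w_q, patches @ w_k, patches @ w_v)
print(weights.round(2))  # row i: how much patch i attends to each patch
```

In a trained model the projection matrices are learned, which is what lets attention emphasize the details that matter in a given context rather than weighting all patches equally.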

When we evaluate a photo, we scan through its constituent textures to locate and isolate the regions that show signs of manipulation or transplantation.
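The scanning step can be pictured as a sliding window. In this minimal sketch, `score_fn` is a hypothetical stand-in for a trained model: here it simply measures local noise energy, so a pasted region with a different noise level stands out. Overlapping window scores are averaged into a per-pixel heat map, which is then thresholded into a mask. The window size, stride, threshold, and synthetic image are illustrative assumptions, not Attestiv's settings; lowering the threshold flags more pixels, roughly as a higher sensitivity setting might.

```python
import numpy as np
from typing import Callable

def scan(img: np.ndarray, score_fn: Callable[[np.ndarray], float],
         window: int = 16, stride: int = 8) -> np.ndarray:
    """Slide a window over img and average overlapping patch scores per pixel."""
    h, w = img.shape
    heat = np.zeros((h, w))
    counts = np.zeros((h, w))
    for y in range(0, h - window + 1, stride):
        for x in range(0, w - window + 1, stride):
            s = score_fn(img[y:y + window, x:x + window])
            heat[y:y + window, x:x + window] += s
            counts[y:y + window, x:x + window] += 1
    # Pixels never covered by a window keep a score of 0.
    return np.divide(heat, counts, out=np.zeros_like(heat), where=counts > 0)

def noise_energy(patch: np.ndarray) -> float:
    """Toy score: spread of horizontal pixel differences (a proxy for noise level)."""
    return float(np.diff(patch, axis=1).std())

# Synthetic image: uniform gray with mild noise, plus a pasted 32x32 region
# whose noise is four times stronger (a hypothetical transplant).
rng = np.random.default_rng(1)
img = 128 + rng.normal(0, 1, (128, 128))
img[32:64, 32:64] = 128 + rng.normal(0, 4, (32, 32))

heat = scan(img, noise_energy)
mask = heat > 3.0  # hypothetical threshold
print(f"flagged {mask.mean():.1%} of pixels")
```

Averaging overlapping windows trades some sharpness at region edges for a smoother, less noisy map; a smaller stride gives finer localization at the cost of more model evaluations.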

In our 50th software release, we are debuting the beta version of Deep Scan Pixel Analysis.

Example:

This “cat on a track” photo has been manipulated: the cat was added to the original image.

Below are the Deep Scan Pixel Analysis results at each of the three available sensitivity levels.

Interested in seeing it in action?

(1) Wikipedia, “Attention (machine learning)”: https://en.wikipedia.org/wiki/Attention_(machine_learning)